This question is quite long; if you already know how feature importance works in tree-based methods, I suggest skipping to the text below the image.

Feature importance (FI) in tree-based methods is computed from how much each variable decreases the impurity of the tree (for a single tree) or the mean impurity across trees (for ensemble methods). I'm almost sure that FI for single trees is not reliable, because trees have high variance, mainly in how the terminal regions are built. XGBoost is empirically better than a single tree and is often regarded as "the best" ensemble learning algorithm, so I will focus on it. One advantage of XGBoost is its regularization to avoid overfitting; XGBoost can also learn linear functions as well as linear regression or linear classifiers do (see Didrik Nielsen). My doubt about its interpretation came up because of the image below:

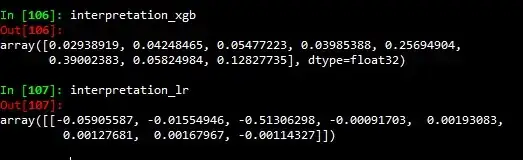
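To make the impurity-decrease idea from the paragraph above concrete, here is a minimal sketch for a single split, using Gini impurity. The toy data, the threshold, and the helper names (`gini`, `impurity_decrease`) are my own assumptions for illustration. As far as I understand, XGBoost's "gain" is defined through its regularized loss rather than Gini, so this shows the general idea only, not XGBoost's exact computation.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(values, labels, threshold):
    """Parent Gini minus the size-weighted Gini of the two children
    obtained by splitting on `values <= threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    n = len(labels)
    weighted_children = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(labels) - weighted_children


# Hypothetical toy data: one feature separates the classes, the other does not.
y = [0, 0, 0, 1, 1, 1]
x_informative = [1, 2, 3, 7, 8, 9]
x_noise = [1, 9, 5, 2, 8, 4]

gain_informative = impurity_decrease(x_informative, y, threshold=5)
gain_noise = impurity_decrease(x_noise, y, threshold=5)

print("informative feature:", gain_informative)
print("noise feature:", gain_noise)
```

A tree's FI for a variable is then the sum of such decreases over every split that uses the variable, and an ensemble averages this over its trees.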

In the upper panel I have the FI from XGBoost for each variable, and in the lower panel the FI (or coefficients) from a logistic regression model. I know that the XGBoost FI is normalized to the range 0-1 and the logistic regression coefficients are not, but the functions usually used for normalization are bijective, so this shouldn't compromise the comparison between the FI of the two models. Logistic regression got the same accuracy (~90) as XGBoost, both in cross-validation and on the test set. Note that the three most important variables in XGBoost are v5, v6 and v8 (in that order of importance), while in the logistic model they are v1, v2 and v3, so the two models give totally different rankings. I'm sure that the interpretation of the logistic model is reliable, so is the XGBoost interpretation unreliable because of this difference? If it is unreliable, is that only the case in linear situations, or in general?
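To show what I mean by normalization not affecting the comparison, here is a small sketch that rescales absolute coefficients to sum to 1 and checks that the ranking is unchanged. The coefficient values are made up for illustration (they are not read from the image), and `normalize` and `ranking` are hypothetical helper names. It also assumes the features were standardized before fitting, since the magnitude of a logistic regression coefficient depends on the units of its feature.

```python
def normalize(scores):
    """Scale non-negative scores so that they sum to 1."""
    total = sum(scores.values())
    return {name: value / total for name, value in scores.items()}


def ranking(scores):
    """Variable names sorted from most to least important."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical coefficients, NOT the values from the image.
coefs = {"v1": 2.4, "v2": -1.8, "v3": 1.1, "v5": 0.3, "v6": -0.2, "v8": 0.1}

# The sign gives the direction of the effect; importance uses the magnitude.
abs_coefs = {name: abs(value) for name, value in coefs.items()}
normalized = normalize(abs_coefs)

same_order = ranking(abs_coefs) == ranking(normalized)
top3 = ranking(normalized)[:3]

print("ranking preserved:", same_order)
print("top three:", top3)
```

Dividing by a positive constant preserves order, so any disagreement between the two rankings has to come from the models themselves rather than from the normalization.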