Consider the problem stated as follows:

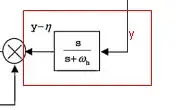

A signal y passes through a high-pass filter $\frac{s}{s + ω}$. A high-pass filter with cutoff frequency ω isolates the variations of the optimized variable from its average value. The state that represents the high-pass filter is denoted by η. In the literature, the state equation is given as $\frac{dη}{dt} = -ωη + ωy$.

My question is: which principle did they use to arrive at that equation? I know that taking the inverse Laplace transform of $\frac{s}{s + ω}$ will result in a differential equation, since the numerator contains a factor of s. However, I don't know how to compute $\frac{s}{s + ω} * y$.
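For reference, here is one possible reading, offered only as a sketch. It assumes that η is the state of the complementary low-pass filter $\frac{ω}{s + ω}$, that the high-pass output is $y - η$, and that initial conditions are zero. None of this is confirmed by the source. Under those assumptions the stated equation follows term by term:

$$
\frac{s}{s + ω} = 1 - \frac{ω}{s + ω}
\quad\Longrightarrow\quad
\frac{s}{s + ω}\,Y(s) = Y(s) - \underbrace{\frac{ω}{s + ω}\,Y(s)}_{η(s)}
$$

$$
η(s) = \frac{ω}{s + ω}\,Y(s)
\;\Longrightarrow\;
(s + ω)\,η(s) = ω\,Y(s)
\;\Longrightarrow\;
s\,η(s) = -ω\,η(s) + ω\,Y(s)
\;\Longrightarrow\;
\frac{dη}{dt} = -ω\,η + ω\,y
$$

If this reading is right, η itself would be the low-pass (average) part of y, and the high-pass filtered signal would be $y - η$.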

I have attached a screenshot of the control diagram. I would really appreciate it if someone could explain this concept to me.
