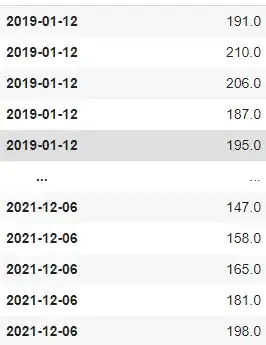

I am using an LSTM model to predict the next measurement of a sensor.

There are approximately 13000 measurements.

My code for the LSTM looks as follows:

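```python
import numpy

def create_dataset(dataset, look_back=6):
    """Turn a (n_samples, 1) array into sliding windows and next-step targets."""
    dataX, dataY = [], []
    # Each window of look_back readings is paired with the reading right after it;
    # range(len - look_back) covers every window whose target is still inside the array
    for i in range(len(dataset) - look_back):
        a = dataset[i:(i + look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return numpy.array(dataX), numpy.array(dataY)
```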
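To make the windowing concrete, here is a standalone sketch on a toy series of 10 values. `sliding_windows` is a hypothetical name for the same sliding-window loop as `create_dataset`, written so that it keeps every window whose target is still inside the array.

```python
import numpy as np

def sliding_windows(series, look_back):
    """Pair each run of look_back values with the value that follows it."""
    X, y = [], []
    for i in range(len(series) - look_back):
        X.append(series[i:i + look_back, 0])
        y.append(series[i + look_back, 0])
    return np.array(X), np.array(y)

# Toy column vector 0, 1, ..., 9 standing in for the scaled sensor readings
series = np.arange(10, dtype=np.float32).reshape(-1, 1)
X, y = sliding_windows(series, look_back=3)

print(X.shape, y.shape)  # (7, 3) (7,)
print(X[0], y[0])        # [0. 1. 2.] 3.0
print(X[-1], y[-1])      # [6. 7. 8.] 9.0
```

Applied to the roughly 13,000 readings with a 67% split and a look back of 6, this gives about 8,700 training windows.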

-------------------------------------------------------------------------

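```python
from sklearn.preprocessing import MinMaxScaler

numpy.random.seed(7)

# new_df: DataFrame holding the sensor readings (a single column)
dataset = new_df.values
dataset = dataset.astype('float32')

# Scale readings to [0, 1]; the scaler is fit on the full series, test part included
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)

# Chronological split: first 67% for training, the rest for testing (no shuffling)
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size, :], dataset[train_size:len(dataset), :]
```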
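Because min-max scaling is linear, an RMSE measured on the scaled values equals the original-units RMSE divided by the data range (max - min). After `inverse_transform`, the RMSE is therefore in sensor units, and what counts as a small error depends on that range. The sketch below uses synthetic data and a hand-written min-max fit that should mirror `MinMaxScaler(feature_range=(0, 1))` for a single column; the helper names and numbers are illustrative assumptions, not the question's data.

```python
import numpy as np

def min_max_params(x):
    """Minimum and range of a 1-D array, as a (0, 1) min-max scaler would learn them."""
    x_min = float(np.min(x))
    return x_min, float(np.max(x)) - x_min

def to_unit_range(x, x_min, x_range):
    return (x - x_min) / x_range

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Synthetic readings and predictions (illustrative only)
rng = np.random.default_rng(7)
readings = rng.normal(50.0, 10.0, size=1000)
predictions = readings + rng.normal(0.0, 2.0, size=1000)

x_min, x_range = min_max_params(readings)
rmse_scaled = rmse(to_unit_range(readings, x_min, x_range),
                   to_unit_range(predictions, x_min, x_range))
rmse_original = rmse(readings, predictions)

# Multiplying the scaled RMSE by the data range recovers the original-units RMSE
print(rmse_original, rmse_scaled * x_range)
```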

-------------------------------------------------------------------------

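```python
look_back = 6
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)

# Reshape to [samples, time steps, features], the layout Keras LSTM layers expect;
# here each window becomes a single time step with look_back features
trainX = numpy.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))
testX = numpy.reshape(testX, (testX.shape[0], 1, testX.shape[1]))
```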
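Keras recurrent layers read input as (batch, timesteps, features). With the (samples, 1, look_back) layout above, the LSTM sees one time step holding all look_back readings as features, so its recurrence never steps through the window. The other common layout is (samples, look_back, 1), which feeds the readings one time step at a time and would need `input_shape=(look_back, 1)`. The sketch below only compares the two shapes on toy windows; whether the second layout lowers the RMSE on this data is untested.

```python
import numpy as np

look_back = 6
windows = np.arange(4 * look_back, dtype=np.float32).reshape(4, look_back)  # 4 toy windows

# Layout used in the question: 1 time step carrying look_back features
as_features = windows.reshape(windows.shape[0], 1, look_back)
# Alternative layout: look_back time steps carrying 1 feature each
as_timesteps = windows.reshape(windows.shape[0], look_back, 1)

print(as_features.shape)   # (4, 1, 6)
print(as_timesteps.shape)  # (4, 6, 1)
```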

-------------------------------------------------------------------------

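```python
from keras.models import Sequential
from keras.layers import LSTM, Dense

# One LSTM layer with 2 units followed by a single-output regression layer
model = Sequential()
model.add(LSTM(2, input_shape=(1, look_back)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)
```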
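For a sense of model size: a Keras LSTM layer with `units` cells on `input_dim` features has four gates, each with an input kernel, a recurrent kernel and a bias, giving 4 · (units · (input_dim + units) + units) weights. The helpers below are hypothetical names that only do this arithmetic, assuming the default `use_bias=True`; `model.summary()` should report the same counts.

```python
def lstm_params(units, input_dim):
    # 4 gates, each with input weights, recurrent weights and a bias vector
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    # weight matrix plus one bias per output unit
    return units * input_dim + units

# LSTM(2) on look_back = 6 features, followed by Dense(1) on the 2 LSTM outputs
small = lstm_params(2, 6) + dense_params(1, 2)
print(small)  # 75
```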

-------------------------------------------------------------------------

```python
# Predict, then map predictions and targets back to the original sensor units
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform(trainY.reshape(-1, 1))
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform(testY.reshape(-1, 1))
```
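The RMSE computation itself is not shown above. A minimal sketch, assuming it is taken on the inverse-transformed arrays: flattening both inputs first matters, because subtracting a (1, n) array from an (n, 1) array broadcasts to an (n, n) matrix and silently gives a wrong number. `rmse` is a hypothetical helper.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root-mean-squared error after flattening both inputs to 1-D."""
    y_true = np.ravel(y_true)
    y_pred = np.ravel(y_pred)
    if y_true.shape != y_pred.shape:
        raise ValueError(f"length mismatch: {y_true.shape} vs {y_pred.shape}")
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Targets shaped (1, n) and predictions shaped (n, 1)
y_true = np.array([[1.0, 2.0, 3.0, 4.0]])
y_pred = np.array([[1.5], [2.0], [2.5], [4.0]])

print(rmse(y_true, y_pred))     # about 0.354
# Without flattening, (1, 4) - (4, 1) broadcasts to a (4, 4) matrix of pairwise differences
print((y_true - y_pred).shape)  # (4, 4)
```

With the names from the code above, the test error would be `rmse(testY, testPredict)`.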

I have tried different learning rates (0.01, 0.001, 0.0001), more layers (1-3) and/or more neurons (1-128), different numbers of epochs (40-200), and training sizes from 67% to 80%. I also tried changing the activation function of the Dense layer (sigmoid, ReLU) and using different optimizers (SGD, Adam, Adagrad). I added Dropout layers, but that did not help decrease the RMSE.

The RMSE does not drop below 6.5; that lowest value was reached with the following parameters:

- Train size = 0.8
- LSTM layers = 2
- Epochs = 100
- Neurons = 32
- Look back = 10
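Whether 6.5 is good depends on how predictable the series is. A common reference point is a persistence (naive) forecast that predicts each reading to be equal to the previous one; if the LSTM's test RMSE is close to it, the model adds little. This is a suggested check, not part of the setup above; `persistence_rmse` is a hypothetical helper and should be applied to the unscaled readings.

```python
import numpy as np

def persistence_rmse(series, look_back=1):
    """RMSE of forecasting each reading as the reading just before it.

    Targets start at index look_back so they line up with the targets
    produced by a look_back-sized sliding window.
    """
    if look_back < 1:
        raise ValueError("look_back must be at least 1")
    values = np.ravel(np.asarray(series, dtype=float))
    targets = values[look_back:]
    previous = values[look_back - 1:-1]
    return float(np.sqrt(np.mean((targets - previous) ** 2)))

print(persistence_rmse([0, 1, 2, 3, 4]))  # 1.0
```

With the names from the code above, `persistence_rmse(scaler.inverse_transform(test), look_back)` would give a baseline to compare against the LSTM's test RMSE.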

If anyone has any advice on how to get the RMSE to a lower value (as close to 1 as possible), I would love to hear it. I can share the dataset if needed for review.