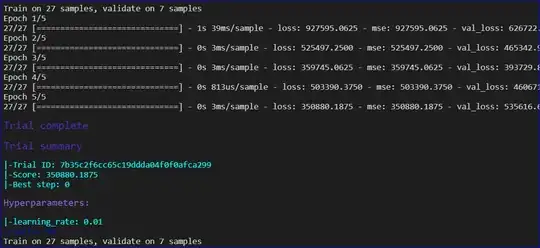

I am training an LSTM to predict a price chart. I am using Bayesian optimization to speed up the hyperparameter search somewhat, since I have a large number of hyperparameters and only a CPU to train on.

Running 100 iterations over the hyperparameter space, with 100 training epochs for each, still takes too long to find a decent set of hyperparameters.

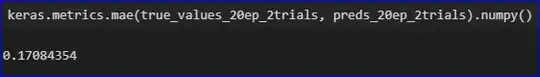

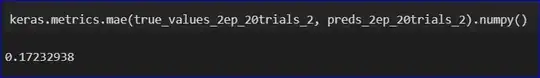

My idea is this: if I train for only one epoch during the Bayesian optimization, is the loss after that single epoch still a good enough indicator of the loss after full training? This would speed up the hyperparameter optimization considerably, and afterwards I could afford to retrain the best 2 or 3 hyperparameter sets for 100 epochs. Is this a good approach?
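To make the idea concrete, here is a minimal sketch of the two-stage procedure I have in mind. Everything in it is a placeholder: `train_and_evaluate` is a hypothetical stand-in for building the Keras model and calling `model.fit(..., epochs=epochs)`, the two hyperparameters (`lr_exp`, `units`) are made up for illustration, and plain random sampling stands in for hyperopt so the snippet runs with the standard library only. Note that the toy loss keeps the same ranking at 1 epoch and at 100 epochs by construction, which is exactly the assumption I am unsure about for a real LSTM.

```python
import random


def train_and_evaluate(params, epochs):
    """Hypothetical stand-in for training the LSTM and returning validation loss.

    In the real setup this would build the Keras model from `params`,
    call model.fit(..., epochs=epochs) and return the validation loss.
    Here it is a toy function so the sketch runs without data.
    """
    base = (params["lr_exp"] + 3) ** 2 + abs(params["units"] - 64) / 64
    # Toy assumption: more epochs only subtract a constant-shaped term,
    # so the ranking of configurations does not change with epochs.
    return base + 1.0 / epochs


def two_stage_search(n_trials=100, cheap_epochs=1, full_epochs=100, top_k=3, seed=0):
    rng = random.Random(seed)

    # Stage 1: evaluate many configurations cheaply (one epoch each).
    trials = []
    for _ in range(n_trials):
        params = {
            "lr_exp": rng.uniform(-5, -1),            # learning rate = 10 ** lr_exp
            "units": rng.choice([16, 32, 64, 128]),   # LSTM units
        }
        trials.append((train_and_evaluate(params, cheap_epochs), params))
    trials.sort(key=lambda t: t[0])

    # Stage 2: retrain only the top_k configurations with the full budget.
    finalists = [(train_and_evaluate(p, full_epochs), p) for _, p in trials[:top_k]]
    finalists.sort(key=lambda t: t[0])
    return finalists


if __name__ == "__main__":
    for loss, params in two_stage_search():
        print(round(loss, 4), params)
```

In my actual code, stage 1 would be the hyperopt run with the objective training for one epoch, and stage 2 would be a manual retraining of the best few parameter sets it returns.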

The other option is to keep 100 epochs for each training run but decrease the number of iterations, i.e. train with fewer different hyperparameter sets.
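For a rough sense of the cost of each option, here is a small calculation in total training epochs. The 100 iterations, 100 epochs and 3 retrained sets come from the description above; the 25 iterations used for the second option is only an assumed example, since I have not settled on a number.

```python
def total_epochs(n_trials, epochs_per_trial, n_retrain=0, retrain_epochs=0):
    """Total number of training epochs spent on search plus optional retraining."""
    return n_trials * epochs_per_trial + n_retrain * retrain_epochs


current = total_epochs(100, 100)          # 100 iterations x 100 epochs
option_1 = total_epochs(100, 1, 3, 100)   # 1 epoch per iteration, retrain best 3
option_2 = total_epochs(25, 100)          # assumed: 25 iterations x 100 epochs

print(current, option_1, option_2)        # 10000 400 2500
```

So the one-epoch approach is much cheaper in raw compute, provided the loss after one epoch ranks the hyperparameter sets in roughly the same order as the loss after 100 epochs.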

Any opinions and/or tips on the above two solutions?

(I am using Keras for the training and hyperopt for the Bayesian optimization.)