I'm having a hard time interpreting the results of my logistic regression.

I have a few questions. First, how can I check whether one feature is more important than the others, i.e. whether its effect is really significant?

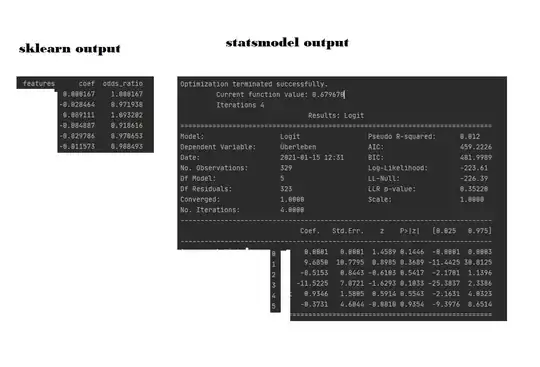

My accuracy is 0.58, which is pretty bad. Anyway, why does scikit-learn's RFECV feature selector tell me to use only feature1 to get the best result, while the model I train with the statsmodels library using all 5 variables performs slightly better?

Also, why do my two models give different results when I train one with scikit-learn and the other with statsmodels?

This all really confuses me. It would be nice if someone could show me an easy way to interpret my results, ideally all in one library.

My code snippets:

Part 1

```python
import matplotlib.pyplot as plt
import seaborn as sns
import statsmodels.api as sm
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split

X = df_n_4[cols]
y = df_n_4['Survival']

# Use a train/test split; changing random_state changes the accuracy score.
# The scores change a lot, which is why a single test score is a high-variance estimate.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2)

# Fit a logistic regression with statsmodels (sm.Logit does not add an intercept by itself)
logit_model = sm.Logit(y_train, X_train).fit()

# predict() returns probabilities; .round() turns them into 0/1 labels at a 0.5 threshold
y_pred = logit_model.predict(X_test)

cf_matrix = confusion_matrix(y_test, y_pred.round())
sns.heatmap(cf_matrix, annot=True)
plt.title('Accuracy: {}'.format(accuracy_score(y_test, y_pred.round())))
plt.ylabel('Actual scenario')
plt.xlabel('Predicted scenario')
plt.show()
```
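Before judging an accuracy of 0.58, it may help to compare it with the trivial baseline of always predicting the most frequent class (scikit-learn's `DummyClassifier(strategy="most_frequent")` does the same). A minimal sketch, assuming 0/1 labels; the helper name and the 58/42 label split are hypothetical, chosen only to show that a 0.58 accuracy can equal the baseline.

```python
import numpy as np


def majority_baseline_accuracy(y):
    """Accuracy of always predicting the most frequent class (assumes 0/1 labels)."""
    y = np.asarray(y)
    p = y.mean()  # share of positive labels
    return max(p, 1 - p)


# Hypothetical test labels: 58 survivors out of 100
y_test = np.array([1] * 58 + [0] * 42)
print(majority_baseline_accuracy(y_test))  # 0.58
```

If the model's accuracy is close to this number, the features add little beyond the class balance, and the confusion matrix will typically show most predictions falling into one class.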

Part 2

```python
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split

X = df_n_4[cols]
y = df_n_4['Survival']

# Use a train/test split; changing random_state changes the accuracy score.
# The scores change a lot, which is why a single test score is a high-variance estimate.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2)
print(len(y_train), "training samples")

# Check the classification score of scikit-learn's logistic regression
# (defaults: intercept included, L2 penalty with C=1.0)
logreg = LogisticRegression()
logreg.fit(X_train, y_train)
y_pred = logreg.predict(X_test)

print('Train/Test split results:')
print(logreg.__class__.__name__ + " accuracy is %2.3f" % accuracy_score(y_test, y_pred))

cf_matrix = confusion_matrix(y_test, y_pred)
sns.heatmap(cf_matrix, annot=True)
plt.title('Accuracy Score: {0}, Variables: feature1'.format(round(accuracy_score(y_test, y_pred), 2)), size=15)
plt.ylabel('Actual scenario')
plt.xlabel('Predicted scenario')
plt.show()
```
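The comments in both snippets note that the score changes with `random_state`. A sketch that makes this visible by repeating the split 20 times and summarizing the accuracies, assuming simulated data from `make_classification` as a hypothetical stand-in for `df_n_4` (300 rows, 5 features, 30% of labels flipped to make the task noisy).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Hypothetical noisy data: 2 informative features, 3 noise features
X, y = make_classification(n_samples=300, n_features=5, n_informative=2,
                           n_redundant=0, flip_y=0.3, random_state=0)

scores = []
for seed in range(20):
    # Same 80/20 split as in the snippets, but with a different seed each time
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2,
                                              random_state=seed, stratify=y)
    model = LogisticRegression().fit(X_tr, y_tr)
    scores.append(accuracy_score(y_te, model.predict(X_te)))

scores = np.array(scores)
print(f"accuracy over 20 splits: mean={scores.mean():.3f}, std={scores.std():.3f}, "
      f"min={scores.min():.3f}, max={scores.max():.3f}")
```

Comparing two models on the same set of repeated splits (or with cross-validation) is more reliable than comparing them on a single `random_state=2` split.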

I don't have much statistical background, so please go easy on me! I'm happy to provide any further information you need. Thanks so much; I've been stuck on this for a week, and everything I read and try only confuses me more.